Child Sexual Abuse Material (CSAM) is material (images, videos, audio, text or other forms) that depicts children in a sexually explicit act or conduct, depicts children in an obscene, indecent or sexually explicit manner, or shows children being sexually abused. The term is used interchangeably with child pornography. The Internet is a largely unsupervised, unregulated and anonymous domain that criminal elements exploit for their own ends. The online safety and mental health of children have become a serious topic of discussion for policymakers across the world, and the prevalence of CSAM in online media is one such dark side of the internet. CSAM can be found on social media platforms such as Twitter, Snapchat, Facebook and Instagram, among many others, as well as on peer-to-peer networks and the dark web. Global demand for CSAM is also growing, and it has emerged as a multi-billion dollar industry.

Countries around the world are taking steps to prevent the circulation of CSAM and to investigate cases where it is already available on online platforms. India is also working on the problem, but with a fragmented approach. On 16th May 2023, the National Human Rights Commission (NHRC) took suo-motu cognisance of media reports that the circulation of child sexual abuse material on the internet and social media platforms had increased by 250% to 300%. The NHRC issued a notice to the Centre, the states and investigation agencies such as the National Crime Records Bureau, asking for a report within six weeks detailing the steps taken to tackle and prevent this menace on social media. It justified its suo-motu action on the ground that the issue amounts to a violation of human rights relating to citizens' life, liberty and dignity, and to the protection of young children from the dangers of sexual abuse material on social media. The NHRC has also conducted multiple national-level conferences on CSAM.

The Gravity of the Situation

In India, the online circulation of CSAM increased sharply during the lockdown. The lockdown gave paedophiles, and those involved in the production and distribution of such content online, opportunities to increase their activity and exploit children. The National Center for Missing & Exploited Children (NCMEC), a US-based non-profit organisation, runs an initiative called CyberTipline for reporting CSAM on online platforms in the USA and worldwide. In 2022, CyberTipline received more than 32 million reports of suspected child sexual exploitation, 99% of which came from Electronic Service Providers (ESPs). Five ESPs (Facebook, Instagram, Google, WhatsApp and Omegle) accounted for 90% of the reports submitted, and Facebook submitted the highest number.

According to this report, India is the largest contributor to CSAM found online: the CSAM uploaded from India increased by 185.5% (from 1.98 million to 5.67 million items) between 2019 and 2022, including a 72% increase from 2020 to 2021. This is just the tip of the iceberg. Over time, the menace has emerged as an organised business run by well-organised gangs.

In the USA, federal law (18 USC 2258A) requires Electronic Service Providers (ESPs) to report to CyberTipline any instances of apparent child pornography that they become aware of on their systems. Canada similarly has a mechanism to report and tackle CSAM.

As CSAM is an organised crime spread across various countries, it requires a calibrated and coordinated approach at the global level.

India Against CSAM

India lacks proper technical and legislative tools to tackle the prevalence of CSAM on online platforms. The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 make it mandatory for significant social media intermediaries to publish a monthly compliance report detailing the complaints received, the action taken on them and the content the intermediaries removed proactively. However, recent reports by significant social media intermediaries make it evident that the platforms have failed to implement this requirement in its true spirit. There is no uniformity in the compliance reports these platforms publish: Google and WhatsApp publish only the total number of proactive actions taken, without categorising the issues involved. Twitter, on the other hand, identifies two categories under which it took proactive action, one of which is CSAM, as opposed to the ten categories identified by Facebook and Instagram. The compliance reports also indicate a low level of public awareness of the issue, as more than 95% of the actions the intermediaries took on CSAM were proactive rather than prompted by user reports.

Legislative Tool to Tackle CSAM in India

In India, three laws specifically relate to child pornography:-

The Information Technology Act, the Indian Penal Code and the Protection of Children from Sexual Offences Act criminalise child sexual abuse or child pornography.

1: Section 67B of the Information Technology Act lays down:-

Punishment for whoever, –

(a) publishes or transmits material in any electronic form which depicts children engaged in sexually explicit act or conduct; or

(b) creates text or digital images, collects, seeks, browses, downloads, advertises, promotes, exchanges or distributes material in any electronic form depicting children in obscene or indecent or sexually explicit manner; or

(c) cultivates, entices or induces children to online relationship with one or more children for and on sexually explicit act; or

(d) facilitates abusing children online; or

(e) records in any electronic form own abuse or that of others pertaining to sexually explicit act with children.

2: Section 292(2) of the Indian Penal Code punishes those who sell, distribute, publicly exhibit or in any manner put into circulation any obscene object.

Section 293 criminalises selling, distributing, exhibiting or circulating such obscene objects to young persons under the age of twenty years.

3: Sections 13, 14 and 15 of the Protection of Children from Sexual Offences Act, 2012 (POCSO) criminalise offences in which a child is used for pornographic purposes. They also criminalise the storage of child pornography in any form and the use of a child for the purpose of sexual gratification, such as representation of the sexual organs of a child, use of a child in sexual acts, or indecent or obscene representation of a child.

India's legislature has occasionally taken up the matter for discussion, but the discussions have not been followed through seriously.

The Rajya Sabha’s Ad Hoc Committee to ‘Study the Alarming Issue of Pornography on Social Media and its Effect on Children and Society as a Whole’ presented its report in 2020 under the chairmanship of Sh. Jairam Ramesh. Some of the measures recommended by the committee are:

1: Specify a national portal under reporting requirements of POCSO Act, 2012

2: Prepare a code of conduct for stricter adherence by social media platforms

3: Require intermediaries operating in India to report CSAM matters to Indian authorities and not just foreign ones

4: Require Internet Service Providers to proactively monitor and take down CSAM

5: Permit the breaking of end-to-end encryption to trace the distribution of child pornography

6: Devise an age verification mechanism and restrict children's access to such content

Enforcement agencies such as the CBI and state agencies have been at the forefront of dealing with the menace of CSAM. The CBI established an Online Child Sexual Abuse and Exploitation (OCSAE) prevention/investigation unit, which registers cases pertaining to CSAM only on a reference from Interpol. It also carried out ‘Operation Carbon’ in 2021 and ‘Megh Chakra’ in 2022. The CBI is the nodal agency for coordinating with Interpol, which provides leads on crimes related to CSAM.

The Kerala police proactively engaged in a covert operation called 'P-Hunt' to hunt down those involved in child sexual exploitation. Only a few states are taking proactive action in this regard.

Big tech companies are also taking steps to counter the prevalence of CSAM. For example, Meta collaborated with NCMEC on the ‘Take It Down’ tool, which helps platform users proactively prevent the spread of explicit or nude images of young people and minors.

The circulation of CSAM causes irreparable psychological damage to children and hinders their development. The issue of child sexual abuse needs to be taken seriously and addressed urgently. Awareness is the key to preventing CSAM, as it has been found that most of the CSAM in India is self-generated.

What is the Way Ahead?

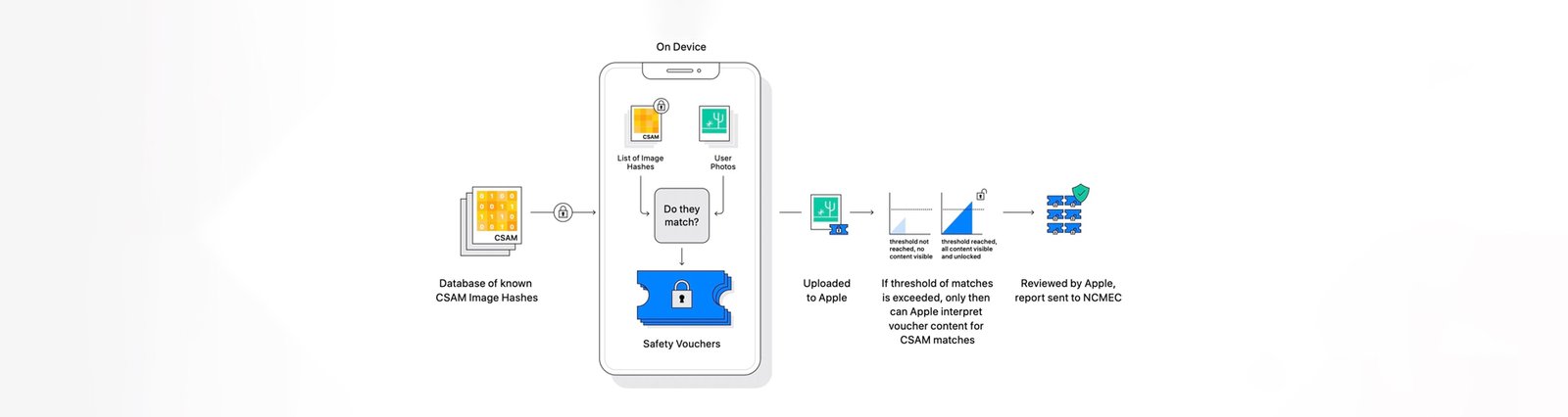

As technology advances, the harms it causes need to be countered with the help of technology itself. CSAM can be tackled through the development of digital forensics and artificial intelligence; at present, hash technology is used to remove CSAM from various platforms. In the USA, Electronic Service Providers are required to report instances of 'apparent child pornography' to CyberTipline when they become aware of them.
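The hash technology mentioned above can be illustrated with a minimal sketch. It assumes a platform holds a list of hash values of previously identified files and checks each upload against it; the function names and the placeholder bytes are hypothetical and used only for illustration. The sketch uses an exact cryptographic hash (SHA-256), which matches only byte-identical copies. Deployed systems typically rely on perceptual hashing so that resized or re-encoded copies also match, which this sketch does not attempt.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the given bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_known(data: bytes, known_hashes: set) -> bool:
    """Return True if the file's hash appears in the reference hash list."""
    return sha256_hex(data) in known_hashes


# Hypothetical reference list: in practice this would be supplied by a
# trusted body, and the platform would never need the original files.
flagged_bytes = b"placeholder bytes standing in for a flagged file"
known_hashes = {sha256_hex(flagged_bytes)}

upload_matches = is_known(flagged_bytes, known_hashes)
other_matches = is_known(b"an unrelated upload", known_hashes)
```

Because only hash values are shared, intermediaries can block known material without the material itself being redistributed to them.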

Steps India Can Take:-

1: Various reports show that most CSAM was flagged by the platforms themselves rather than by users. This indicates a low level of awareness of CSAM and of the methods for reporting it. Hence, there is an urgent need to organise campaigns and conferences at the regional level to raise awareness of the issue.

2: Although every platform publishes compliance reports in India, the reports lack uniformity, as not every platform categorises the issues on which it took action. For instance, Twitter clearly states, with data, that it took action on CSAM, but WhatsApp does not. A uniform reporting format therefore needs to be specified for these online platforms.

3: A nodal body should be designated to look after the protection of children online, like the eSafety Commissioner in Australia.

4: The absence of an automatic electronic monitoring system impairs efforts to detect CSAM algorithmically. It is therefore crucial to advance digital forensics and technological solutions that can effectively detect the circulation of CSAM on online platforms.

5: Big tech companies should be made accountable for hosting offensive content, to give them an incentive to take proactive measures against the prevalence of CSAM on their platforms. They should also share data, models and processes with peers and other companies so that content can be taken down effectively from every platform.

6: Indian investigation agencies should integrate with global platforms to increase efficiency and investigate matters effectively.

7: India should create its own database of CSAM hash values that intermediaries can refer to in order to block CSAM content. This would help control the damage and deal with cases efficiently. India currently depends mostly on inputs from foreign agencies and must devise its own methods.

8: Enforcement agencies should be equipped and trained with effective tools and techniques to detect, investigate and monitor CSAM activities.

9: A dedicated national hotline should be created for reporting crimes related to children, especially CSAM. The National Cyber Crime Reporting platform exists, but a platform dedicated to preventing CSAM is needed.

The internet is borderless, and so should be the approach. The menace of CSAM is global, so the effort to tackle it also needs to be global and coordinated.